Automatic Segmentation of Diabetic Foot Ulcer from Mask Region-Based Convolutional Neural Networks

Muñoz PL, Rodríguez R, Montalvo N

DOI : https://doi.org/10.31546/2633-8653.1006

Abstract

Diabetic foot ulcer is one of the leading causes of lower extremity amputation in diabetic patients. The aim of this work is to propose a computational method for segmenting diabetic foot ulcer images of patients treated with Heberprot-P, a drug that accelerates wound healing and reduces the risk of amputation. The material is a bank of 1176 images provided by the Center for Genetic Engineering and Biotechnology in Havana. As a method, we propose the Mask R-CNN model together with learning transfer to automatically locate the region that delimits the ulcer. The proposed model obtained very satisfactory results, and we validated its performance together with specialist physicians on a set of 1010 images.

Keywords: Learning transfer, Heberprot-P, Mask R-CNN.

Introduction

Diabetes mellitus is a chronic disease caused by inadequate production of insulin in the human body or by inadequate sensitivity of the body's cells to the action of insulin. It affects a considerable share of the world population [1]. According to the World Health Organization (WHO), around 422 million adults were estimated to suffer from diabetes mellitus in 2014, which represented 8.5% of the global adult population. By 2045, the WHO expects this figure to rise to 9.9% [2]. In Latin America and the Caribbean, 62% of the population will suffer from diabetes mellitus [3].

Complications associated with diabetes can decrease the patient's quality of life and even lead to premature death. In the United States, diabetes was the seventh leading cause of death in 2015, with 252,806 deaths associated with the disease [4]. If not treated properly, its complications include damage to blood vessels, kidneys and nerves, as well as a high risk of heart attack or stroke [1].

Diabetic foot ulcer (DFU) is the leading cause of limb amputations. The WHO estimates that 15% of patients with diabetes mellitus suffer from DFU at least once during their lifetime [5]. Each year, many people lose a lower limb due to ulcer infections [6]. This situation also has a significant economic impact. For example, in 2012 the health costs associated with DFU treatment in the United States were estimated at $176 billion [7].

In 2016, Brazil reported 97,156 hospital admissions due to DFU, and this number represented a cost to the health system of 264 million dollars [8].

Heberprot-P is a drug developed by researchers from the Center for Genetic Engineering and Biotechnology (CIGB, [9]) for the treatment of complex DFUs [10]. Administered through repeated infiltrations inside the wound, it accelerates the healing of deep ulcers and decreases the risk of amputation in patients with severe ulcerations. In 2016, Cuba reported 480 amputations due to DFUs among 35,000 patients treated with this drug [11].

The healing process of a DFU treated with Heberprot-P lasts several weeks and, during this period, the specialist pays special attention to the reduction of wound size. Throughout this observation period, the specialist photographs the ulcer, which makes it possible to keep a record of the patient's evolution.

During visual inspection, the physician analyzes the coloration and texture of the skin surrounding the wound, as well as secretions, swelling and sensitivity to touch.

To develop computational methods that determine ulcer size and support other morphometric studies, the DFU lesion must first be segmented automatically. Several works have been published in this direction [12-15]. For example, Wang et al. proposed a combination of unsupervised techniques and a support vector machine (SVM) to determine wound boundaries [16]. Despite the results obtained, large-scale use of this algorithm was virtually impossible due to its high computational cost. Methods based on bio-inspired techniques, deformable contours and clustering algorithms require the appropriate parameters to be chosen manually for each image, which is impractical in medical applications given the large volume of data generated [17].

Convolutional neural networks (CNNs) have proven to be a powerful strategy for delimiting DFUs with a high degree of accuracy [18-20], and their results are superior to those achieved with the other methods mentioned above.

The main problem addressed in this research, which is also its novelty, is the use of the Mask Region-Based Convolutional Neural Network (Mask R-CNN) in DFU image segmentation. It is important to point out that we did not modify this general method; our novelty is its application to DFU image segmentation, for which we know of no previous use in this type of pathology. In this work, we take a pre-trained network and then carry out learning transfer (LT): we update the weights of the last layer by training the model with 520 previously labelled DFU images, keeping the weights of the remaining layers fixed. We carry out this study on images of diabetic patients treated with Heberprot-P. Table I summarizes a state-of-the-art review of some algorithms used in DFU image segmentation.

Table I shows that the application of Mask R-CNN to DFU image segmentation does not appear among the reviewed algorithms, which is the novelty of this work.

The rest of the paper is organized as follows. Section II briefly introduces the main concepts of CNNs, in particular the Mask R-CNN model and the learning transfer technique. Section III presents the methodology used for DFU segmentation. Section IV contains the results obtained, and Section V discusses them. Section VI presents our conclusions.

II. About Convolutional Neural Networks

A. Neural networks

Artificial neural networks, or simply neural networks, are inspired by biological neural networks. In its simplest description, the biological brain consists of a set of neurons linked by connections, which are responsible for enhancing the electrical pulse that passes through them. The electrical pulse produced by an external stimulus is transmitted from neuron to neuron across synapses and is processed by each neuron until a network output response is finally produced, which is encoded as knowledge.

Neural networks are mathematical or computational models composed of a set of highly interconnected units known as neurons, capable of computing values from input data. Kumar and Sharma pointed out that these models have two important characteristics [21]:

1. They have a set of adaptive weights, or numerical parameters, that are adjusted by a learning algorithm.

2. They are able to approximate nonlinear functions.

Therefore, the strength of the connections between neurons is represented by adaptive weights, which are modified during the training phase of the model and used during its prediction phase [22].
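As a minimal sketch of what it means for adaptive weights to be adjusted by a learning algorithm, the following pure-Python example trains a single sigmoid neuron by gradient descent on the logical OR function. The toy data, function names, learning rate and number of epochs are illustrative assumptions and do not come from the cited works.

```python
import math


def sigmoid(z):
    """Logistic activation, a common nonlinear choice."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Weighted sum of the inputs followed by the nonlinearity."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train_neuron(samples, epochs=2000, lr=0.5):
    """Adjust the weights by gradient descent on the cross-entropy loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Gradient of the loss with respect to the weighted sum.
            error = predict(weights, bias, inputs) - target
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


# Toy data: logical OR (hypothetical example, unrelated to the DFU images).
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_neuron(OR_SAMPLES)
```

A single neuron of this kind can only separate classes with a straight line; the ability to approximate nonlinear functions comes from combining many such units in layers.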

B. Mask Region-based Convolutional Neural Network (Mask R-CNN)

In computer vision (CV), instance segmentation refers to detecting every object in an image and determining the class to which each belongs. It combines two important tasks: object detection and semantic segmentation. Object detection spatially locates each object in the image and frames it within a bounding box, while semantic segmentation classifies each pixel of the image into one of a given set of classes, without distinguishing between objects of the same class.
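The difference between the two outputs can be illustrated with a small sketch. A semantic mask only marks which pixels belong to a class, whereas an instance-level result gives each object its own label. The example below uses connected-component labelling on a binary mask purely as an illustration of that distinction; it is an assumption made for this sketch and is not how Mask R-CNN separates instances.

```python
from collections import deque


def split_into_instances(semantic_mask):
    """Label 4-connected foreground regions of a binary mask 1, 2, 3, ..."""
    rows, cols = len(semantic_mask), len(semantic_mask[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if semantic_mask[r][c] and not labels[r][c]:
                # Found an unvisited foreground pixel: start a new instance.
                next_label += 1
                labels[r][c] = next_label
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and semantic_mask[ny][nx]
                                and not labels[ny][nx]):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return labels, next_label


# Hypothetical semantic mask with two separate foreground regions.
SEMANTIC = [
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]
instance_labels, instance_count = split_into_instances(SEMANTIC)
```

In the semantic mask both regions carry the same value, while the instance labelling assigns them different identifiers.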

Mask R-CNN is a deep neural network used to carry out instance segmentation. It is an extension of Fast R-CNN (more precisely, of its successor Faster R-CNN) and predicts a segmentation mask for each region of interest (RoI) proposed by the method [23]. Figure 1 represents the architecture of a Fast R-CNN, which takes as input an entire image and a set of object proposals. The network first processes the whole image with several convolutional (conv) and max pooling layers to produce a conv feature map. Then, for each object proposal, a RoI pooling layer extracts a fixed-length feature vector from the feature map. Each feature vector is fed into a sequence of fully connected (fc) layers that finally branch into two sibling output layers: one that produces softmax probability estimates over K object classes plus a catch-all "background" class, and another that outputs four real-valued numbers for each of the K object classes. Each set of four values encodes refined bounding-box positions for one of the K classes. Mask R-CNN extends this architecture [25] by adding a branch for predicting segmentation masks on each RoI, in parallel with the existing branch for classification and bounding-box regression. The mask branch is a small fully convolutional network (FCN) applied to each RoI, predicting a segmentation mask in a pixel-to-pixel manner.
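The RoI pooling step described above can be sketched in a few lines. The following pure-Python example max-pools an arbitrary rectangular region of a small 2-D feature map into a fixed 2 x 2 grid, so that regions of different sizes yield feature vectors of the same length. The function name, the end-exclusive RoI convention and the toy feature map are assumptions made for illustration; real implementations operate on multi-channel tensors.

```python
def roi_max_pool(feature_map, roi, out_size=2):
    """Max-pool the region roi = (row0, col0, row1, col1), end-exclusive,
    of a 2-D feature map into an out_size x out_size grid."""
    row0, col0, row1, col1 = roi
    height, width = row1 - row0, col1 - col0
    pooled = []
    for i in range(out_size):
        # Bin boundaries along the rows; every bin keeps at least one row.
        r_start = row0 + (i * height) // out_size
        r_end = max(row0 + ((i + 1) * height) // out_size, r_start + 1)
        pooled_row = []
        for j in range(out_size):
            c_start = col0 + (j * width) // out_size
            c_end = max(col0 + ((j + 1) * width) // out_size, c_start + 1)
            pooled_row.append(max(feature_map[r][c]
                                  for r in range(r_start, r_end)
                                  for c in range(c_start, c_end)))
        pooled.append(pooled_row)
    return pooled


# Hypothetical 4 x 6 feature map.
FEATURE_MAP = [
    [1, 2, 3, 4, 5, 6],
    [7, 8, 9, 10, 11, 12],
    [13, 14, 15, 16, 17, 18],
    [19, 20, 21, 22, 23, 24],
]
whole = roi_max_pool(FEATURE_MAP, (0, 0, 4, 6))   # 4 x 6 region
small = roi_max_pool(FEATURE_MAP, (1, 1, 4, 4))   # 3 x 3 region
```

Both calls return a 2 x 2 grid, which flattens to a feature vector of length four regardless of the size of the input region.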

Mask R-CNN is simple to implement and train given the Fast R-CNN framework, which facilitates a wide range of flexible architecture designs. Additionally, the mask branch only adds a small computational overhead, enabling a fast system and rapid experimentation [25].

The results achieved by Mask R-CNN on the Common Objects in Context (COCO) segmentation task exceeded those of the most prominent state-of-the-art algorithms at that time [24].

C. Training and Learning transfer

During training, a multi-task loss is defined on each sampled RoI as $L = L_{cls} + L_{box} + L_{mask}$. The classification loss $L_{cls}$ and the bounding-box loss $L_{box}$ are identical to those defined in [23]. The mask branch has a $Km^2$-dimensional output for each RoI, which encodes $K$ binary masks of resolution $m \times m$, one for each of the $K$ classes [25]. A per-pixel sigmoid is applied to this output, and $L_{mask}$ is defined as the average binary cross-entropy loss. For a RoI associated with ground-truth class $k$, $L_{mask}$ is defined only on the $k$-th mask (the other mask outputs do not contribute to the loss).
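The mask term of this loss can be written out as a short sketch. The example below applies a per-pixel sigmoid to the mask of the ground-truth class and averages the binary cross-entropy over its pixels, ignoring the masks of all other classes. The data layout (nested lists of raw scores), the function names and the clamping constant are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mask_loss(mask_logits, gt_mask, gt_class):
    """Average binary cross-entropy on the mask of the ground-truth class.

    mask_logits: K masks, each an m x m grid of raw scores.
    gt_mask: m x m grid of 0/1 labels for the RoI.
    gt_class: index k of the ground-truth class; other masks are ignored.
    """
    eps = 1e-12  # keeps log() finite for extreme scores
    logits_k = mask_logits[gt_class]
    total, count = 0.0, 0
    for logit_row, label_row in zip(logits_k, gt_mask):
        for logit, label in zip(logit_row, label_row):
            p = min(max(sigmoid(logit), eps), 1.0 - eps)  # per-pixel sigmoid
            total -= label * math.log(p) + (1 - label) * math.log(1.0 - p)
            count += 1
    return total / count


# Hypothetical RoI with K = 2 classes and m = 2.
LOGITS = [
    [[0.0, 0.0], [0.0, 0.0]],     # mask for class 0: uninformative scores
    [[5.0, -5.0], [5.0, -5.0]],   # mask for class 1: confident scores
]
GT_MASK = [[1, 0], [1, 0]]
loss_class_0 = mask_loss(LOGITS, GT_MASK, gt_class=0)
loss_class_1 = mask_loss(LOGITS, GT_MASK, gt_class=1)
```

With all scores at zero, every pixel is predicted with probability 0.5, so the loss for class 0 equals ln 2; the confident and correct mask of class 1 gives a much smaller value.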

This definition of $L_{mask}$ allows the network to generate masks for every class without competition among classes, which decouples mask and class prediction and makes it a powerful tool for DFU image segmentation. This differs from common practice when applying FCNs to semantic segmentation, which typically uses a per-pixel softmax and a multinomial cross-entropy loss. In that case, masks across classes compete; with the per-pixel sigmoid and binary loss of Mask R-CNN, they do not. This formulation is key for good instance segmentation results [25].
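The competition between classes can be made concrete with a small numerical sketch. For a single pixel with raw scores for two hypothetical classes, raising the score of the second class lowers the softmax probability of the first, while the sigmoid probability of the first class is unaffected. The scores and function names are illustrative assumptions.

```python
import math


def softmax(scores):
    """Per-pixel softmax: class probabilities compete and sum to 1."""
    top = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def independent_sigmoids(scores):
    """Per-pixel sigmoid: each class is scored on its own."""
    return [1.0 / (1.0 + math.exp(-s)) for s in scores]


# Raw scores of one pixel for K = 2 hypothetical classes.
before = [2.0, 0.0]
after = [2.0, 3.0]  # only the score of the second class is raised

softmax_drop = softmax(before)[0] - softmax(after)[0]
sigmoid_drop = independent_sigmoids(before)[0] - independent_sigmoids(after)[0]
```

Here `softmax_drop` is positive because the first class loses probability to the second, whereas `sigmoid_drop` is exactly zero.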

Strategies designed for image detection and classification achieve their goal by locating general characteristics of the images, such as borders and shapes. For this reason, models pre-trained on a different computer vision task are often used as a starting point. This approach is known as learning transfer (LT), more commonly called transfer learning.

In [27] it is stated that LT "is the improvement of learning in a new task, from the transfer of knowledge from a network that has been previously trained". For example, a model initially trained to detect credit cards and transactions can later be used, through LT, to detect bank fraud. The appeal of this approach is that learning does not require large volumes of data; therefore, the time needed to adjust the model is not significantly high. Another advantage of LT is the possibility of obtaining better model performance, as the network adjusts at every step of training.

III. Segmentation of Diabetic Foot Ulcer

A. Obtaining the images

The CIGB of Havana provided images of patients treated with Heberprot-P at different hospitals in Cuba.

These images show DFUs at different stages of treatment. In addition, a specialist manually filtered the 1176 images to remove duplicates and scenes of very poor quality.

B. Labelling of the images

We labelled the images with the VGG Image Annotator tool, developed by the Visual Geometry Group of the Department of Engineering Science at the University of Oxford [29]. We defined two classes of labels in this process: ulcer and image background. We labelled as ulcer each region that completely contained the wound; the rest was considered image background. Figure 2 shows an example of the labelling process described above on one of the images provided by CIGB.

C. Model training

We used Mask R-CNN as the base model for automatic DFU image segmentation, adopting the implementation published by Matterport Inc. under the MIT license (a license developed at the Massachusetts Institute of Technology (MIT)) [30]. It is an implementation of Mask R-CNN in Python 3, Keras and TensorFlow [31].

We carried out the learning transfer on a CNN with weights pre-trained on the MS COCO database [32]: we updated the weights of the last layer by training the model with 520 previously labelled DFU images, keeping the weights of the remaining layers fixed. We used the stochastic gradient descent algorithm and performed 200 training iterations [33]. We ran the training phase on a 12 GB NVIDIA Pascal graphics card.
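The idea of updating only the last layer while keeping the remaining weights fixed can be sketched on a toy problem. In the pure-Python example below, a fixed feature extractor stands in for the pre-trained layers and only a final logistic layer is trained with stochastic gradient descent. This is an illustrative sketch under stated assumptions, not the training code used in this work: the feature extractor, the toy data, the learning rate and all function names are hypothetical, and the default of 200 passes over the data merely echoes the number of iterations mentioned above.

```python
import math
import random


def frozen_features(x, frozen_weights):
    """Stand-in for the pre-trained layers: fixed weights, never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in frozen_weights]


def train_last_layer(samples, frozen_weights, epochs=200, lr=0.1, seed=0):
    """Stochastic gradient descent on the last (logistic) layer only."""
    rng = random.Random(seed)
    head = [0.0] * len(frozen_weights)  # trainable last-layer weights
    bias = 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in random order
        for x, target in data:
            feats = frozen_features(x, frozen_weights)
            z = sum(h * f for h, f in zip(head, feats)) + bias
            error = 1.0 / (1.0 + math.exp(-z)) - target
            # Only the head and its bias are updated; frozen_weights is not.
            head = [h - lr * error * f for h, f in zip(head, feats)]
            bias -= lr * error
    return head, bias


# Hypothetical frozen weights and toy data (label 1 when the first input > 0).
FROZEN = [[1.0, 0.0], [0.0, 1.0]]
TOY_SAMPLES = [((1.0, 0.5), 1), ((0.8, -0.3), 1),
               ((-1.0, 0.2), 0), ((-0.7, -0.6), 0)]
head, bias = train_last_layer(TOY_SAMPLES, FROZEN)
```

Because the frozen part is never touched, each update is cheap and only a small number of parameters has to be fitted, which is why this kind of training can work with a modest number of labelled images.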

IV. Obtained Results

The proposed method for automatic DFU segmentation is the basis for the later design of software to study the ulcer healing process in patients treated with Heberprot-P. The main aim of this research was to obtain a segmentation result as close as possible to the size of the lesion, so that the specialist can later edit and refine it and perform morphometric analysis.

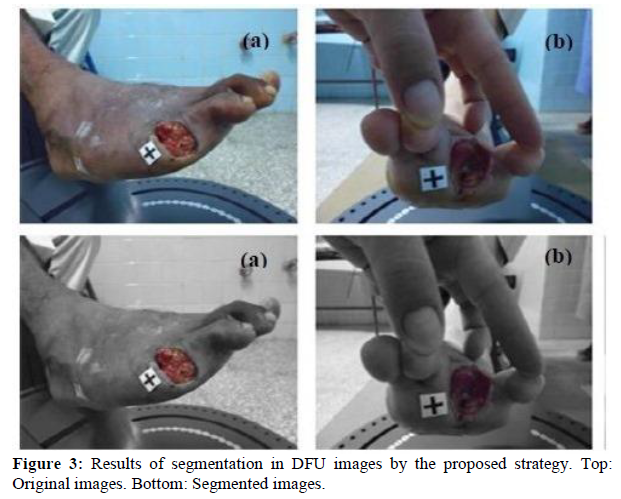

We validated the segmentation results on 480 images that were not used in training. Figure 3 shows the isolation of the DFU in two images, where pixels belonging to the background class are shown in grayscale and those belonging to the ulcer in color. Figures 4, 5, 6 and 7 show other examples of DFU segmentation. We carried out more than 150 DFU image segmentations with Mask R-CNN, not all of which are presented here for reasons of space.

It is important to point out that the medical specialists evaluated these results as satisfactory and encouraging, which is also evident visually in the figures.

Visual comparison, a method of qualitative evaluation, is of great importance, especially when specialists make the final assessment of the segmentation results. However, this procedure is highly subjective; therefore, we carry out a quantitative comparison of these results in the next section.

V. Discussion: Quantitative Comparison of Results

The previous section shows the good results obtained with Mask R-CNN; in the examples shown, all DFU lesions were clearly delimited. It is important to point out that the authors of this paper know of no previous use of Mask R-CNN for DFU image segmentation. Our strategy transfers knowledge from a previously trained network, which has learned relevant visual features, to perform DFU segmentation.

We now carry out a quantitative evaluation and discussion, comparing the results obtained with our strategy against manually segmented images by means of different metrics. We then compare and contrast the results of this study with works published in the literature.

The motivation of biomedical image segmentation is often to automate manual segmentation. For that reason, perhaps the most important comparison is that between the segmentation results and a manual segmentation (considered as ground truth). On many occasions, manual segmentation gives the best and most reliable results when identifying structures that will be analysed by the specialist. In addition, largely due to the lack of ground truth, an effective quantitative evaluation of a segmentation method is difficult to achieve, and this task remains an open problem [38]. Therefore, quantitative comparison with manual segmentation may provide a useful indication [39].

Thus, for this research an angiology specialist outlined the DFU lesion regions in a group of 70 images, which we considered as ground truth. For reasons of space, we show only four of them in Figure 8.

Table II lists the results of comparing the manually segmented images with those obtained by the proposed strategy. As evaluation metrics we used Accuracy, Sensitivity, Precision, Specificity and F-Measure [38], [40]. The first four rows of the table give the numerical comparison of the manually outlined images (see Figure 8) with those obtained by segmentation with Mask R-CNN. Two other numerical comparisons are also presented.
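As a minimal sketch of how such metrics can be computed, the example below compares a predicted binary mask with a manually outlined one pixel by pixel. It assumes the standard definitions of these metrics in terms of true and false positives and negatives; the function name and the toy masks are hypothetical and the numbers are unrelated to those in Table II.

```python
def segmentation_metrics(predicted, ground_truth):
    """Pixel-wise metrics for two binary masks given as nested 0/1 lists."""
    tp = fp = tn = fn = 0
    for pred_row, true_row in zip(predicted, ground_truth):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1          # ulcer pixel correctly detected
            elif p and not t:
                fp += 1          # background marked as ulcer
            elif not p and t:
                fn += 1          # ulcer pixel missed
            else:
                tn += 1          # background correctly rejected

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    sensitivity = ratio(tp, tp + fn)
    precision = ratio(tp, tp + fp)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "precision": precision,
        "specificity": ratio(tn, tn + fp),
        "f_measure": ratio(2 * precision * sensitivity,
                           precision + sensitivity),
    }


# Hypothetical 2 x 3 masks: one hit, one false alarm, one miss.
PREDICTED = [[1, 1, 0], [0, 0, 0]]
MANUAL = [[1, 0, 0], [1, 0, 0]]
scores = segmentation_metrics(PREDICTED, MANUAL)
```

Sensitivity measures how much of the manually outlined ulcer is recovered, while specificity measures how much of the background is correctly left out, which is why the two are usually reported together.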

Table II shows the high percentages obtained in the metrics used to evaluate quantitatively the effectiveness of the Mask R-CNN segmentation algorithm against the manually segmented images. Of particular note are the percentages for Sensitivity and Specificity, which many authors consider important performance measures in medical imaging [40]. These scores show that in all cases the Mask R-CNN method behaves well in DFU image segmentation, in line with what can be appreciated visually in the figures. In other applications, many authors have already highlighted the potential of Mask R-CNN as a segmentation tool [25,41].

Another work on DFU segmentation, reported in [20], takes cropped images as inputs and provides pixel-wise probabilities of wound segment masks as outputs; the final masks are obtained by applying a threshold of 0.5 to every pixel. Our strategy does not use any threshold, so the two strategies use the CNN in different ways. However, both methods were able to isolate the DFU.
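The thresholding step described for [20] can be sketched as follows. This is only an illustration of turning a pixel-wise probability map into a binary mask at 0.5; the function name `probabilities_to_mask`, the toy probability values, and the choice of treating a probability exactly equal to the threshold as wound are assumptions, not details taken from [20].

```python
import numpy as np


def probabilities_to_mask(prob_map, threshold=0.5):
    """Binarize a pixel-wise wound-probability map.

    Hypothetical helper: pixels whose probability is at least `threshold`
    are labelled as wound (True), all others as background (False).
    """
    probs = np.asarray(prob_map, dtype=float)
    if probs.size and (probs.min() < 0.0 or probs.max() > 1.0):
        raise ValueError("probabilities must lie in [0, 1]")
    return probs >= threshold


# Toy 2x3 probability map (illustrative values only).
probs = np.array([[0.10, 0.50, 0.92],
                  [0.49, 0.75, 0.03]])
mask = probabilities_to_mask(probs)
print(mask.astype(int))
```

The resulting boolean mask can then be compared with a manually outlined mask using pixel-wise metrics such as Sensitivity and Specificity.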

In [18], the authors introduced a dataset of 705 foot images and provided the ground truth for the ulcer region and the surrounding skin, very similar to the strategy followed in this work for supplying the so-called true image. They also proposed two-tier transfer learning from bigger datasets to train the CNN and to segment the ulcer and surrounding skin automatically. The difference between that work and our strategy is that they used fully convolutional networks (FCNs) for DFU segmentation. However, the results of both works were very similar (see [18]), since both were able to segment the DFU properly. In addition, both papers used very similar metrics for the quantitative evaluation. The work in [18] demonstrates the potential of FCNs in DFU segmentation, which can be further improved with a larger dataset.

In [14], a classical method is used to segment DFU: the authors applied an optimization technique based on particle swarm optimization (PSO). The results obtained with our strategy were markedly superior to those of this classical technique.

They concluded that future work should mainly aim to improve the accuracy of tissue segmentation and classification.

VI. Conclusions

In this work, we trained a Mask R-CNN algorithm, which was designed for object detection and segmentation of natural instances, to discriminate lesions on DFU images. We also carried out a qualitative evaluation, performed by an angiology specialist, who expressed great satisfaction with the results obtained. We then performed a quantitative comparison between the results achieved with the Mask R-CNN algorithm and manually segmented images. In the classification of DFU lesions we found high values in the metrics used, which indicates good performance of this strategy. We should emphasize that, for training the network, we took DFU images without performing any pre-processing; i.e., we processed them in their natural state. In future work, we will compare the results obtained here with Mask R-CNN against those of other CNN architectures.

Consent for Publication: Written informed consent was obtained from the patient for the publication of this case report.

Conflicts of Interest: The authors declare no conflict of interest.

References:

1.World Health Organization. Global report on diabetes. World Health Organization. 2016.

2.World Health Organization. 2017.

3.Seguel G. ¿Por qué debemos preocuparnos del pie diabético? Importancia del pie diabético. Revista médica de Chile. 2013;141:1464-1469.

4.Centers for Disease Control and Prevention. National diabetes statistics report, 2017. Atlanta, GA: Centers for Disease Control and Prevention, US Dept of Health and Human Services. 2017.

5.Yazdanpanah L, Nasiri M, Adarvishi S. Literature review on the management of diabetic foot ulcer. World J Diabetes. 2015;6:37-53.

6.Armstrong DG, Lavery LA, Harkless LB. Validation of a diabetic wound classification system: the contribution of depth, infection, and ischemia to risk of amputation. Diabetes Care. 1998;21:855-859.

7.Everett E, Mathioudakis N. Update on management of diabetic foot ulcers. Ann N Y Acad Sci. 2018;1411:153-165.

8.Sánchez-Cruz LY, Martínez-Villarreal AA, Lozano-Platonoff A, et al. Epidemiología de las úlceras cutáneas en latino américa. Medicina Cutánea Ibero-Latino-Americana. 2017;44:183-197.

9.Center for Genetic Engineering and Biotechnology (CIGB). Cuba. 2018.

10.Berlanga J, Fernández JI, López E, et al. Heberprot-p: a novel product for treating advanced diabetic foot ulcer. MEDICC Rev. 2013;15:11-15.

11.Heberprot-p: Center for Genetic Engineering and Biotechnology (CIGB), 2018.

12.Suresh A, Lavanya S. Analysis of segmentation techniques on foot ulcer images. Intl J Adv Res In Sci Eng. 2014;3:262-271.

13.Saratha M, Mohana Priya V. Detection of Diabetic Wounds Based on Segmentation Using Accelerated Mean Shift Algorithm. Intl J Adv Res Comput Sci Softw Eng. 2016;6:201-206.

14.Babu KS, Sukanta S, Nithya DK. Efficient Detection and Classification of Diabetic Foot Ulcer Tissue using PSO Technique. Intl J Eng Tech. 2018;7:1006-1010.

15.Keerthika A, Sangeetha G, JayaBharathi C, Pavithra S. Prediction of Diabetic Foot Ulcer based on Region growth segmentation. Intl J Pure Appl Math. 2018;119:643-651.

16.Wang L, Pedersen PC, Agu E, Strong DM, Tulu B. Area determination of diabetic Foot Ulcer images using a cascaded two-stage SVM-based classification. IEEE Trans Biomed Eng. 2017;64:2098-2109.

17.Yadav MK, Manohar DD, Mukherjee G, Chakraborty C. Segmentation of chronic wound areas by clustering techniques using selected color space. J Med Imag Health Inform. 2013;3:22-29.

18.Goyal M, Yap MH, Reeves ND, et al. Fully convolutional networks for diabetic foot ulcer segmentation. IEEE Int Conf Syst Man Cybern. 2017:618-623.

19.Manu G, Neil DR, Satyan R, et al. Fully Convolutional Networks for Diabetic Foot Ulcer Segmentation. arXiv:1708.01928. 2017.

20.Changhan W, Xinchen Y, Max S, et al. A Unified Framework for Automatic Wound Segmentation and Analysis with Deep Convolutional Neural Networks. Conf Proc IEEE Eng Med Biol Soc. 2015;2015:2415-2418.

21.Kumar EP, Sharma EP. Artificial neural networks-a study. Intl J Emerg Eng Res Tech. 2014;2:143-148.

22.Jimenez F. Sistemas de software basados en Redes Neuronales (Inteligencia Artificial Avanzada) y el Estado del Arte de la Tecnología Global. Neuromorphic Technologies. 2011.

23.Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell. 2015;91-99.

24.Lin TY, Maire M, Belongie S, et al. Microsoft COCO: Common objects in context. European Conference on Computer Vision (ECCV). 2014:740-755.

25.He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. IEEE International Conference on Computer Vision (ICCV). 2017:2980-2988.

26.Rodríguez RM, Sossa JHA, Antonio GT. Procesamiento y Análisis Digital de Imágenes. Editorial Ra-Ma, Spain. ISBN: 978-84-9964-007-8. May 2011.

27.Olivas ES. Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques. IGI Publishing. 2009.

28.Wu Z, Zhang Y, Yu F, Xiao J. A GPU implementation of GoogleNet. Technical Report. 2014:6.

29.Visual Geometry group of the Engineering Science Department at the University of Oxford, 2015.

30.Abdulla W. Mask R-CNN for object detection and instance segmentation on keras and tensorflow. 2017.

31.Keras and Tensorflow. 2017.

32.MSCOCO database, 2017.

33.Bottou L. Large-Scale Machine Learning with Stochastic Gradient Descent. Y. Lechevallier, G. Saporta (eds.), Proceedings of COMPSTAT'2010. Springer-Verlag Berlin Heidelberg, 2010;177-186.

34.Desai R, Bhatt B. Review of Classification Techniques of Foot Ulcer Detection. Intl J Innov Adv Comput Sci. 2015;4.

35.Aishwarya V, Chandralekha R, Sarika R, et al. Diabetic Foot Ulcer assessment through the aid of smart phone. Intl J Comput Eng Appl. 2016;10:53-57.

36.Babu KS, Sukanta S, Nithya DK. Efficient Detection and Classification of Diabetic Foot Ulcer Tissue using PSO Technique. Intl J Eng Tech. 2018;7:1006-1010.

37.Manu G, Reeves ND, et al. Robust Methods for Real-time Diabetic Foot Ulcer Detection and Localization on Mobile Devices. IEEE J Biomed Health Inform. 2019;23:1730-1741.

38.Chaki N, Saeed K, Shaikh SH. Exploring Image Binarization Techniques. Studies in Computational Intelligence. Springer India. 2014.

39.Rodríguez R, Sossa JH. Mathematical Techniques for Biomedical Image Segmentation. In: Narayan R (Ed.), Encyclopedia of Biomedical Engineering. Elsevier. 2019;3:64-78.

40.Goyal M, Reeves ND, Rajbhandari S, et al. Recognition of Ischaemia and Infection in Diabetic Foot Ulcers: Dataset and Techniques. arXiv:1908.05317v1 [eess.IV]. 2019.

41.Simon R, Siems J. Faster Training of Mask R-CNN by Focusing on Instance Boundaries. Comput Vis Image Underst. 2019;188:102795.

© 2018 medicalpressopenaccess.com. All rights reserved.